What agentic AI actually is: a deeply researched and definitive explanation

The hype-free, deeply researched explanation of agentic AI that you’ve been waiting for: what it really is, and what it's not.

Over the past two years, and especially in the last six months, there’s been an onslaught of “agentic AI” rhetoric from every tech company and wannabe AI influencer you can think of. Apparently, it’s the next frontier in artificial intelligence. But what actually is it?

Most of the things I hear and read about agentic AI aren’t actually agentic AI, and it’s starting to do my head in. So, I’ve done the research for you and put this piece together, which properly defines agentic AI for customer experience leaders.

I’ll briefly chart the history of agentic systems, which go back much further than OpenAI would have you believe. I’ll define agentic systems according to the leading pioneers and founders of the field, back when it was known as Distributed Artificial Intelligence. I’ll then show you how each of the leading generative AI technology companies define agentic AI and AI agents, and highlight the similarities and differences.

By the end of this article, you'll be able to tell what an AI agent is, and what it's not, so that you can make better decisions about how you might (or might not) develop your own AI agents.

What’s all the fuss about agentic AI?

Google, Microsoft, OpenAI and all the big dogs are claiming that agentic AI will disrupt industries. Y Combinator envisages a world in the not-too-distant future where a company built on AI agents, with as few as a handful of staff, hits unicorn status. Microsoft sees a world where part of its core business (Excel, Word, PowerPoint) becomes irrelevant as AI agents absorb most, if not all, of what those programs do. The prediction is that AI agents will consume the Software as a Service marketplace.

As well as the big dogs shouting about the agentic AI future, you also have all of the startups and scale-ups trying to sell agentic capabilities to enterprises like yours, all of which now claim that you can use their platforms to build agentic AI solutions.

Add to that all of the newly crowned AI experts telling you to simply drop a comment on their LinkedIn post to be given the keys to the agentic AI castle, and you can see how this whirlwind of agentic AI madness gathers pace.

But what actually is agentic AI? What makes an AI system “agentic”? Is it simply software that automates tasks, like a traditional chatbot? Or does it require something deeper?

The need for a clear definition of agentic AI

The confusion about what agentic AI is stems, in part, from the varied and often vague definitions offered by all of the players in the field referenced above. For some, “agentic” encompasses anything from basic rule-based systems to cutting-edge generative AI. Others insist it must involve purposeful planning and goal-driven behaviour.

In 1994, Michael Wooldridge and Nick Jennings wrote the following, which encapsulates exactly where we are today, now that AI agents are hitting mainstream awareness:

“The problem is that although the term is widely used, by many people working in closely related areas, it defies attempts to produce a single universally accepted definition. This need not necessarily be a problem: after all, if many people are successfully developing interesting and useful applications, then it hardly matters that they do not agree on potentially trivial terminological details. However, there is also the danger that unless the issue is discussed, ‘agent’ might become a ‘noise’ term, subject to both abuse and misuse.”

So here we are, 30 years later (thanks David ;)) and that concern has come to pass. The word agent is now being bandied around and becoming pure noise.

Without a clear, mainstream definition of agentic AI, the term doesn’t just risk being used to rebrand traditional automation as something new and groundbreaking; it’s already being used that way, muddying the waters for businesses trying to understand what this technology truly offers.

History of agentic systems

As you can see from the 1994 quote above, the concept of agentic AI is not new. Not by a long shot. It might feel new because you’ve only come across it recently. It might seem like it’s been thrust into the world by the marketing department of OpenAI. But the concepts of software agents and multi-agent systems date back as far as the 1970s. Back then, the field was known as Distributed Artificial Intelligence.

Distributed Artificial Intelligence

Distributed Artificial Intelligence (DAI) is concerned with coordinating behaviour among a collection of semi-autonomous problem-solving agents. These software programs collaborate by sharing knowledge, goals and plans to act together, solve joint problems, or make decisions, either individually or collectively.

The late Les Gasser was one of the early pioneers of DAI. One of the first books on the topic was Gasser and Alan Bond's 1988 Readings in Distributed Artificial Intelligence, a kind of literature review of the research on distributed AI systems conducted before 1988, going back to the 1970s.

Distributed Artificial Intelligence was later termed ‘multi-agent systems’. Even today, the two terms are often used interchangeably, and both are concerned with how systems can function together to complete complex tasks.

The autonomous agent and multi-agent systems community

Since then, the field of autonomous agents and multi-agent systems has been progressing in a similar fashion to all other subfields of AI, such as speech recognition and language understanding. They each have their communities of researchers, academics, practitioners, builders and enthusiasts.

For example, The International Foundation for Autonomous Agents and Multiagent Systems (IFAAMAS) is a non-profit dedicated to promoting science and technology in the areas of autonomous agents and multiagent systems. Then you have the annual international conference on Autonomous Agents and Multiagent Systems (AAMAS) that’s been going since 2002, along with plenty of books and research published on the topic from the 70s to today.

So the concept of agentic AI isn’t new; it has existed for decades. But that doesn’t tell us what agentic AI actually is. To understand this, we must define the word agent.

Defining ‘agency’

The Oxford Dictionary defines an agent as

“a person or thing that takes an active role or produces a specified effect.”

According to that definition, all forms of automated technology solutions ought to be classed as agents, depending on how you define ‘active role’. Arguably, Google’s search algorithms play an active role in marrying user queries with search results. Does that mean Google is one big agent? What about Netflix? Its machine learning algorithms play an active role in recommending titles to watch based on your browsing history. Is this recommendation system also an agent?

If so, is an AI agent simply a program or algorithm? It seems like we could do with a more specific definition.

Agents as actors

How about the accounting definition of agent, which is

“A person appointed by another person, known as the principal, to act on his or her behalf.”

This definition is more constrained. AI agents are, after all, deployed to act on behalf of someone or something, and the concept of acting supposes that the agent is doing something. Again, though, it says nothing of the nature of the appointment or the technology used. By that definition, an entire mobile app could be classed as an agent because it’s appointed by a company to act on its behalf.

The philosophical definition of agent

How about the philosophical definition of agency then, wherein to have agency is to exhibit

“purposeful, goal directed activity (intentional action). An agent typically has some sort of immediate awareness of their physical activity and the goals that the activity is aimed at realizing. In ‘goal directed action’ an agent implements a kind of direct control or guidance over their own behavior.”

This definition starts to allude to some of the things you might expect from an AI agent. Surely an AI agent should have purpose and be designed to fulfil a goal of some kind? Having an awareness of its physical activity could easily be transposed in software to an awareness of its environment: the tools it has access to, the input it’s receiving, the job it has to do and where to send the output. Finally, having control or guidance over its own behaviour suggests autonomy: deciding what to do, and doing it, without human involvement.

That seems fair enough, but what about the ability for AI agents to plan, reason, iterate and learn over time? What about their ability to work together to solve problems as in DAI and multi-agent systems? What about the concept of persisting over time? Are these important distinctions? Also, the above definition could technically be applied to a rule-based NLU chatbot or any software program without AI. Is the only difference that agentic AI has some kind of AI component and other forms of software agents don’t? Are those pre-determined, rule-based systems still considered agents of some kind? Or are they programs?

To pick this apart, we have to be able to describe the difference between an agent and a program.

What’s the difference between an AI agent and a program?

As luck would have it, in 1996 Stan Franklin and Art Graesser co-authored a paper that asked this exact question. In it, the authors tracked down every definition of the term software agent offered by the most reputable researchers of the time and compared and contrasted them, before arriving at their own synthesised definition that clearly separates agents from programs.

Franklin and Graesser did a great job of weeding out the definitions that weren’t specific enough to distinguish agents from programs, noting that

“Software agents are, by definition, programs, but a program must measure up to several marks to be an agent.”

So what are those marks?

What is the definition of an AI agent?

A software agent, according to Franklin and Graesser, is

“A system situated within and a part of an environment that senses that environment and acts on it, over time, in pursuit of its own agenda and so as to effect what it senses in the future.”

Because the concept of software agents and multi-agent systems came from Distributed Artificial Intelligence, a subfield of Artificial Intelligence, it stands to reason that the above definition of a software agent is also a perfectly acceptable definition of AI agents and, to be specific, of generative AI agents. After all, generative AI is simply a subfield of deep learning, which, like DAI, is itself a subfield of AI. So what’s the difference? Technology architecture? If so, why should that matter? If I have rubber tyres and you have inflatable tyres, are they not both tyres? If my socks are made of cotton and yours are silk, are they not both socks?

The above definition seems pretty sound to me. It distinguishes rule-based workflows (programs) from agents, and highlights the most important elements of agency. Any attempt to twist or reshape this definition (from 1996!) is fruitless.

What are the qualities of AI agents?

Generally speaking, in the paper, there were a few qualities that most of the definitions of AI agents shared. There was general consensus that agents should be able to:

perceive their environment,

sense inputs and stimuli,

reason about the things they sense,

plan an action or actions,

act autonomously to accomplish a specified goal

Other qualities included:

having a special purpose (narrow scope),

being able to persist over time,

being deployed on behalf of a person or other system,

having the ability to interact with other agents,

being proactive,

having dialogue capabilities, and

being able to affect the real world.

Franklin and Graesser turned these qualities into a simple list that agents, and thus AI agents, are required to possess if they are to be considered agents at all.

Every agent must meet the first four criteria of being Reactive, Autonomous, Goal-oriented and Temporally Continuous. The other categories help define agents more granularly. Agents can also be classified by the nature of their function, such as a planning agent or research agent.
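To make the "must meet all four" rule concrete, here's a minimal sketch that encodes the four mandatory properties as a checklist. The `AgentProfile` class, its field names and the two example systems are hypothetical illustrations, not something from Franklin and Graesser's paper; the comments paraphrase the properties, and deciding whether a real system is, say, autonomous still takes judgement rather than a boolean.

```python
from dataclasses import dataclass, fields
from typing import List


@dataclass
class AgentProfile:
    """Hypothetical checklist: one flag per mandatory property."""
    reactive: bool               # senses its environment and acts on it
    autonomous: bool             # exercises control over its own actions
    goal_oriented: bool          # pursues an agenda rather than simply responding
    temporally_continuous: bool  # is a continuously running process


def missing_properties(profile: AgentProfile) -> List[str]:
    """Names of the mandatory properties this system lacks."""
    return [f.name for f in fields(profile) if not getattr(profile, f.name)]


def is_agent(profile: AgentProfile) -> bool:
    """Under this checklist, a system is an agent only if nothing is missing."""
    return not missing_properties(profile)


# A scripted, rule-based chatbot runs continuously and reacts to input,
# but follows paths written in advance rather than its own agenda.
scripted_bot = AgentProfile(reactive=True, autonomous=False,
                            goal_oriented=False, temporally_continuous=True)
scheduler = AgentProfile(reactive=True, autonomous=True,
                         goal_oriented=True, temporally_continuous=True)

print(is_agent(scripted_bot), missing_properties(scripted_bot))
# → False ['autonomous', 'goal_oriented']
print(is_agent(scheduler))  # → True
```

Because the check is "all or nothing", a system that ticks three of the four boxes is still a program, which mirrors the point that agency isn't a sliding scale under this definition.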

What do AI agents do?

In Michael Wooldridge’s 2009 book, An Introduction to MultiAgent Systems, he builds on the above definition and provides a simple and memorable framework for what agents do.

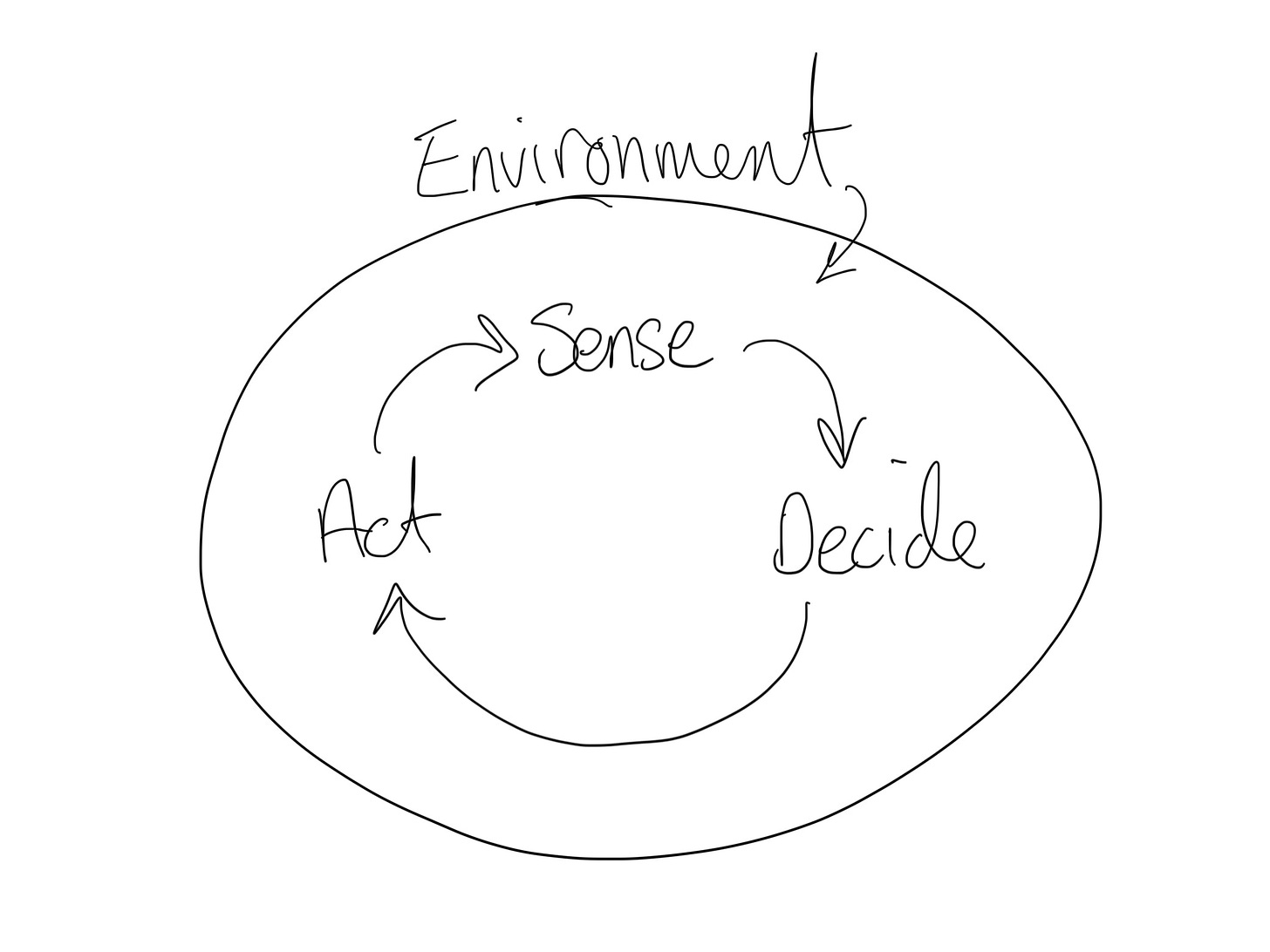

Agents Sense, Decide and Act within their Environment to fulfil a given agenda.

An environment is the space within which an agent operates. For example, an AI agent that’s responsible for finding and booking meetings with multiple people based on their availability will exist in an environment consisting of calendars, scheduling tools, email systems, and potentially messaging platforms. This environment provides the data and resources the agent interacts with, such as availability details, user preferences, and communication channels, enabling it to perform its task effectively.

The scheduling agent might Sense the availability of participants by accessing their calendars, Decide on the optimal meeting time based on overlapping free slots and preferences, then Act by sending calendar invites and notifying participants of the scheduled meeting.

This behaviour happens in a loop because agents can continue to operate after the task has been fulfilled. For example, say that one participant declines the meeting. The agent then needs to Sense that this has occurred, Decide what to do next, and Act on that decision. That could mean going back to the calendars to find the next free slot for everyone and rearranging the meeting so that everyone can attend.
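The scheduling example above can be sketched as a Sense, Decide, Act loop. This is a minimal illustration under stated assumptions: the calendars are plain Python sets of busy hours, "sending invites" just records an hour, and the `Environment` and `SchedulingAgent` names are hypothetical stand-ins rather than any real calendar API.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set

WORKING_HOURS = range(9, 17)  # candidate start hours, 09:00 to 16:00


@dataclass
class Environment:
    """Stand-in for the calendars and email system (all hypothetical)."""
    busy: Dict[str, Set[int]]  # person -> hours already booked
    declines: Dict[str, Set[int]] = field(default_factory=dict)  # person -> declined hours
    sent: List[int] = field(default_factory=list)  # hours the agent sent invites for


class SchedulingAgent:
    def __init__(self, env: Environment, participants: List[str]) -> None:
        self.env = env
        self.participants = participants
        self.booked: Optional[int] = None

    def sense(self) -> Dict[str, Set[int]]:
        """Sense: read each participant's unavailable hours (bookings plus declines)."""
        return {p: self.env.busy.get(p, set()) | self.env.declines.get(p, set())
                for p in self.participants}

    def decide(self, unavailable: Dict[str, Set[int]]) -> Optional[int]:
        """Decide: choose the earliest hour nobody is unavailable for."""
        for hour in WORKING_HOURS:
            if all(hour not in hours for hours in unavailable.values()):
                return hour
        return None

    def act(self, hour: int) -> None:
        """Act: 'send' invites by recording the chosen hour in the environment."""
        self.env.sent.append(hour)
        self.booked = hour

    def step(self) -> None:
        """One pass of the loop; only acts when the best slot has changed."""
        hour = self.decide(self.sense())
        if hour is not None and hour != self.booked:
            self.act(hour)


env = Environment(busy={"ana": {9, 10}, "ben": {9, 11}})
agent = SchedulingAgent(env, ["ana", "ben"])
agent.step()                # books 12:00, the first hour both are free
env.declines["ben"] = {12}  # Ben declines the 12:00 invite
agent.step()                # the next pass senses the decline and rebooks
print(env.sent)             # → [12, 13]
```

The key design choice is that `step` can run again after the meeting is booked: if nothing has changed it does nothing, and if a participant declines, the same loop senses the change and acts on it.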

AI Agent Architecture

An AI agent’s architecture is designed to enable it to perceive its environment, take actions, and learn from its experiences. At its core, the architecture typically consists of everything Wooldridge mentions above: a combination of sensors (Sense), actuators (Act) and a control system (Decide).

The sensors allow the agent to gather data from its environment, while the actuators enable it to perform actions based on the decisions made by the control system.

The control system is the brain of the AI agent. It processes the perceptions gathered by the sensors, makes decisions, and sends commands to the actuators. This system can be quite complex, incorporating various algorithms and models to ensure the agent operates effectively and efficiently.

In generative AI agents, it's typically the large language model that acts as the control system, taking the inputs and reasoning about, or planning, which actions should be taken. Depending on the agent, the large language model might also act as the actuator, if function calling is handled by the LLM itself.
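Here's a minimal sketch of that split between sensor, control system and actuator. To keep it runnable offline, `control_system` is a hypothetical placeholder for an LLM: it only pattern-matches, whereas a real model would reason over the input and the tool descriptions. The JSON `{"tool": ..., "args": ...}` shape is an assumption that mimics the general idea of function calling, not any vendor's actual API, and the tools themselves are made up.

```python
import json
from typing import Callable, Dict


# Actuators: the tools the agent can use to change its environment (hypothetical).
def send_email(to: str, body: str) -> str:
    return f"email sent to {to}"


def create_event(title: str, hour: int) -> str:
    return f"event '{title}' created at {hour}:00"


TOOLS: Dict[str, Callable[..., str]] = {"send_email": send_email,
                                        "create_event": create_event}


def sensor(raw_input: str) -> str:
    """Sensor: turn raw input from the environment into a percept (trimmed text)."""
    return raw_input.strip()


def control_system(percept: str) -> str:
    """Placeholder for the LLM. It returns a function call as JSON; a real model
    would produce this by reasoning, not by the keyword check used here."""
    if "meeting" in percept.lower():
        return json.dumps({"tool": "create_event", "args": {"title": "Sync", "hour": 14}})
    return json.dumps({"tool": "send_email", "args": {"to": "team", "body": percept}})


def actuator(call_json: str) -> str:
    """Actuator: execute the tool the control system chose, with its arguments."""
    call = json.loads(call_json)
    return TOOLS[call["tool"]](**call["args"])


def run(raw_input: str) -> str:
    """Sense, then Decide, then Act."""
    return actuator(control_system(sensor(raw_input)))


print(run("  Book a meeting with the team "))  # → event 'Sync' created at 14:00
print(run("Share the Q3 numbers"))             # → email sent to team
```

In most function-calling setups the model only proposes the call and surrounding code executes it, which is why the actuator is a separate function in this sketch.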

The classification of types of AI agents

AI agents can be classified into different types based on their architecture:

Reactive agents respond to their environment based on their current state. They are designed to react to stimuli in real-time, making them suitable for tasks that require immediate responses.

Model-based agents use a model of the environment to make decisions. This allows them to predict future states and plan their actions accordingly.

Goal-based agents take it a step further by having a set of goals and using a plan to achieve them. These agents are designed to pursue specific objectives, making decisions that align with their goals. This type of architecture is particularly useful for complex tasks that require strategic planning and execution.
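To show how the three types differ, here's a minimal sketch using a toy thermostat. The room model (heating on raises the temperature by 1°C per step, heating off lowers it by 1°C) and the 20°C target are assumptions made up for illustration. The reactive agent only looks at the current reading, the model-based agent keeps state and predicts the next reading, and the goal-based agent searches for a plan that reaches a goal.

```python
from collections import deque
from typing import List, Optional

# Toy model of the room (an assumption for illustration): each step,
# heating on raises the temperature by 1°C and heating off lowers it by 1°C.
EFFECT = {"heat_on": +1, "heat_off": -1}
TARGET = 20


def reactive_agent(temp: int) -> str:
    """Reactive: map the current percept straight to an action, with no memory."""
    return "heat_on" if temp < TARGET else "heat_off"


class ModelBasedAgent:
    """Model-based: keep internal state (the previous reading) and use a simple
    model of the environment (the trend continues) to predict the next state."""

    def __init__(self) -> None:
        self.last_temp: Optional[int] = None

    def act(self, temp: int) -> str:
        trend = 0 if self.last_temp is None else temp - self.last_temp
        self.last_temp = temp
        predicted = temp + trend
        return "heat_on" if predicted < TARGET else "heat_off"


def goal_based_agent(temp: int, goal: int, max_steps: int = 10) -> Optional[List[str]]:
    """Goal-based: breadth-first search for the shortest sequence of actions
    that the model says will reach the goal temperature."""
    frontier = deque([(temp, [])])
    seen = {temp}
    while frontier:
        current, plan = frontier.popleft()
        if current == goal:
            return plan
        if len(plan) >= max_steps:
            continue
        for action, delta in EFFECT.items():
            nxt = current + delta
            if nxt not in seen:
                seen.add(nxt)
                frontier.append((nxt, plan + [action]))
    return None


print(reactive_agent(18))                      # → heat_on
thermostat = ModelBasedAgent()
print(thermostat.act(18), thermostat.act(19))  # → heat_on heat_off (switches off early)
print(goal_based_agent(18, 21))                # → ['heat_on', 'heat_on', 'heat_on']
```

At 19°C the reactive agent would keep heating, while the model-based agent predicts it will hit the target on the next step and switches off early; the goal-based agent works out the whole sequence up front.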

Understanding the architecture of AI agents is crucial for developing systems that can effectively interact with their environment, make informed decisions, and continuously improve their performance.

Generative AI agents are more often than not Reactive, like a chatbot, or Goal-based, like a travel planner. However, that's not exclusively the case.

How do AI Agents make decisions?

Agentic AI systems use decision-making algorithms to select the best course of action based on their perceptions and goals. These algorithms fall into several categories, each with its own approach to decision-making.

Rule-based. Almost every definition of ‘agent’ that you’ll come across today states that rule-based applications qualify as agents: that algorithms relying on a set of predefined rules to make decisions sit at the bottom end of the ‘agenticness’ scale, and that agents will use rules for simple tasks. However, rule-based agents don’t exercise control over their actions; they simply act in response to their environment and are very often scripted. Therefore, according to the accepted industry definition of ‘agent’, rule-based systems don’t qualify, even if your AI vendor says they do. Most of the tech vendors that provide generative AI capabilities include rule-based applications in their definition, and all of the so-called agentic AI platforms have a place to define rules (or ‘guides’, as they're often called). To reiterate: researchers in the multi-agent systems field dispute that systems following pre-defined rules are agents at all. They regard them as programs.
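For contrast, here's a minimal sketch of what rule-based decision-making looks like in practice. The rules, keywords and action names are hypothetical. Notice that every possible behaviour was written down in advance by a person; the bot never chooses anything for itself, which is exactly why researchers class this as a program.

```python
from typing import Callable, List, Tuple

# Each rule pairs a condition with a fixed action, written in advance by a person.
RULES: List[Tuple[Callable[[str], bool], str]] = [
    (lambda text: "refund" in text, "route_to_refunds"),
    (lambda text: "password" in text, "send_reset_link"),
]
FALLBACK = "escalate_to_human"


def rule_based_bot(message: str) -> str:
    """Return the action of the first matching rule, else the fallback.
    The same input always produces the same output, and there is no goal."""
    text = message.lower()
    for condition, action in RULES:
        if condition(text):
            return action
    return FALLBACK


print(rule_based_bot("I want a REFUND"))     # → route_to_refunds
print(rule_based_bot("Where is my parcel?"))  # → escalate_to_human
```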

Model-based algorithms, on the other hand, use a model of the environment to make decisions. This allows the agent to simulate different scenarios and predict the outcomes of various actions. By doing so, the agent can choose the action that is most likely to achieve its goals.
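Here's a minimal sketch of that idea, assuming a hypothetical stock-reordering agent. Its model of the environment is a one-line `simulate` function plus a handful of made-up demand scenarios; it simulates every candidate order across the scenarios and picks the one most likely to achieve its goal of not running out of stock.

```python
from typing import List

# Hypothetical demand scenarios for next week, e.g. drawn from past weeks' sales.
DEMAND_SCENARIOS = [30, 45, 50, 55, 70]


def simulate(stock: int, order: int, demand: int) -> int:
    """The agent's model of its environment: stock left at the end of the week."""
    return stock + order - demand


def success_rate(stock: int, order: int) -> float:
    """Fraction of simulated scenarios in which the goal (no stockout) is met."""
    outcomes = [simulate(stock, order, d) for d in DEMAND_SCENARIOS]
    return sum(o >= 0 for o in outcomes) / len(outcomes)


def choose_order(stock: int, options: List[int]) -> int:
    """Pick the action most likely to achieve the goal; ties go to the smaller order."""
    return max(sorted(options), key=lambda order: success_rate(stock, order))


print(choose_order(20, [0, 20, 40, 60]))  # → 60
print(choose_order(50, [60, 0, 40, 20]))  # → 20
```

Sorting before calling `max` is a deliberate design choice: `max` returns the first of several equally good options, so the agent prefers the smallest order that is just as likely to meet the goal.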

Utility-based algorithms take a different approach by using a utility function to evaluate the desirability of different actions. This function assigns a value to each possible action based on how well it aligns with the agent’s goals. The agent then selects the action with the highest utility, ensuring that it consistently makes decisions that maximise its effectiveness.
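As an illustration, here's a minimal sketch of a utility-based choice for a hypothetical travel-planning agent. Each booking option stands in for a candidate action, and the weights in `WEIGHTS` are assumptions expressing how much one user dislikes cost, journey time and changes. The utility function collapses those factors into one number, and the agent picks the highest.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Option:
    name: str
    price: float  # ticket price in pounds
    hours: float  # total journey time
    stops: int    # number of changes


# Hypothetical weights: how much this user dislikes each unit of cost, time and hassle.
WEIGHTS = {"price": -0.01, "hours": -0.5, "stops": -1.0}


def utility(option: Option) -> float:
    """Assign each candidate action a single desirability score (higher is better)."""
    return (WEIGHTS["price"] * option.price
            + WEIGHTS["hours"] * option.hours
            + WEIGHTS["stops"] * option.stops)


def choose(options: List[Option]) -> Option:
    """Select the action with the highest utility."""
    return max(options, key=utility)


options = [
    Option("direct", price=300, hours=7, stops=0),     # utility -6.5
    Option("one-stop", price=220, hours=9, stops=1),   # utility -7.7
    Option("cheapest", price=150, hours=12, stops=2),  # utility -9.5
]
print(choose(options).name)  # → direct
```

Changing the weights changes the decision without touching the selection logic: if this user cared three times as much about price (a weight of -0.03), the one-stop option would come out on top.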

In addition to these traditional algorithms, AI agents can use machine learning to learn from their experiences and improve their decision-making over time, so that they can handle increasingly complex tasks. By analysing past actions and their outcomes, the agent can identify patterns and adjust its decision-making process accordingly, becoming more effective and efficient as it gains experience.
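One simple way to learn from outcomes is a multi-armed bandit, sketched minimally below. The contact channels and their response rates are hypothetical, and the environment's noise is simulated with a seeded random generator. The agent keeps a running average of the reward each action has earned and picks the best; starting every estimate optimistically high (a standard bandit technique) makes sure each action gets tried at least once.

```python
import random
from typing import Dict, List


class LearningAgent:
    """Learns which action works best from the rewards it observes.
    Uses greedy selection with optimistic initial estimates."""

    def __init__(self, actions: List[str], optimism: float = 1.0) -> None:
        self.estimates: Dict[str, float] = {a: optimism for a in actions}
        self.counts: Dict[str, int] = {a: 0 for a in actions}

    def choose(self) -> str:
        """Pick the action with the highest estimated reward so far."""
        return max(self.estimates, key=self.estimates.get)

    def learn(self, action: str, reward: float) -> None:
        """Incremental average: move the estimate toward the observed reward."""
        self.counts[action] += 1
        n = self.counts[action]
        self.estimates[action] += (reward - self.estimates[action]) / n


# Hypothetical environment: true response rates the agent doesn't know, plus noise.
rng = random.Random(42)
TRUE_RESPONSE_RATE = {"email": 0.2, "sms": 0.6}


def observe(action: str) -> float:
    return TRUE_RESPONSE_RATE[action] + rng.uniform(-0.1, 0.1)


agent = LearningAgent(["email", "sms"])
for _ in range(100):
    action = agent.choose()
    agent.learn(action, observe(action))

print(agent.counts)  # → {'email': 1, 'sms': 99}
```

Once both actions have been tried, this agent stops exploring. That's fine in a stable toy environment, but a real agent would usually keep some exploration (for example, epsilon-greedy) in case the environment changes.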

Do the generative AI tech companies share the proper definition of AI agent?

Most of you reading this aren’t researchers. You aren’t scrolling through the depths of the AI publishing archives to figure out what’s what. Most people get their information about AI from the media, which gets most of its information from the leading providers of the solutions you use every day: the Googles, Microsofts and OpenAIs of the world. So how do they define AI agents, and is it consistent with the proper definition? Let’s take a look.

Google’s definition of AI agents

According to this video by Google, agents are too hard to define and definitions vary widely. So rather than defining it, it talks about applications having degrees of ‘agenticness’.

Some research does support this perspective. In 1995, Russell and Norvig stated that:

“The notion of an agent is meant to be a tool for analyzing systems, not an absolute characterization that divides the world into agents and non-agents."

However, Franklin and Graesser countered this by including subcategories of agents in their definition, in order to at least put a belt around the trousers to stop them from falling down. So it’s not actually the case that agentic AI can be anything and everything; it’s still confined to the definition proposed above.

Google also refers to rule-based systems as being agentic. Even in the Vertex AI Agent Builder platform, if you want to build a ‘chat agent’, Google suggests you use Dialogflow CX. Dialogflow does have integrations with Gemini, but it’s far from what I’d consider an agentic AI platform.

Google, for me, doesn’t properly grasp the definition of agent and is skewing its definition in favour of the technology it has to offer.

Microsoft’s definition of AI agents

In Microsoft’s SharePoint agent demo video, one example of an agent is simply searching documents. By this logic, any retrieval augmented generation (RAG) system is, by virtue of that alone, an agent according to Microsoft.

Microsoft CEO Satya Nadella, in his Ignite 2024 keynote, referenced all kinds of use cases for AI agents, from meeting facilitators to financial planners to slide deck creators and document writers. Callie August followed Satya and said that “Agents range from simple prompt and response to fully autonomous”, referring to a scale of ‘agenticness’ similar to Google’s.

It’s not like Microsoft doesn’t get it, though. If you hear Satya Nadella talking about the potential of AI agents on the BG podcast, then it’s clear that he understands the concept deeply. I suspect that because of the current stage of adoption and tooling on offer, Microsoft is widening its definition to encapsulate all of the capabilities it has, even if some of them don’t meet the standard accepted definition of ‘agentic’.

Amazon’s definition of AI agents

Amazon describes AI agents in a way that’s a little more aligned with the accepted definition:

“An artificial intelligence (AI) agent is a software program that can interact with its environment, collect data, and use the data to perform self-determined tasks to meet predetermined goals.”

“An AI agent independently chooses the best actions it needs to perform to achieve those goals.”

The only thing missing here is the concept of persistence and that the actions an agent performs can and will impact what it then senses in the future, but this definition from Amazon is pretty good.

OpenAI’s definition of AI agents

OpenAI, in its research paper Practices for Governing Agentic AI Systems, defines agentic AI systems as follows:

“Agentic AI systems are characterized by the ability to take actions which consistently contribute towards achieving goals over an extended period of time, without their behavior having been specified in advance.”

This definition is light, but a good start. It references the temporal continuity requirement of an agent, and alludes to the lack of rules by stating how agents don’t have their behaviour specified in advance.

OpenAI, too, however, refers to agency as a scale, ranging from more to less agentic.

“We define the degree of agenticness in a system as ‘the degree to which a system can adaptably achieve complex goals in complex environments with limited direct supervision.’”

At least it’s consistent with its understanding of the requirement for agents to achieve goals in complex environments without (or with limited) supervision, and it does propose some confines around what it means to be agentic, but as mentioned, the concept of a scale of agenticness is contested.

To qualify as being agentic, according to OpenAI, a system must meet each of the following criteria:

Be goal-oriented

Exist in a complex environment

Be able to adapt

Execute independently

The degree to which a system has agency then depends on the complexity of the goals and environment, the degree to which it can adapt, and how independent the system is. I’d say this is an acceptable definition.

LangChain’s definition of AI agents

LangChain defines AI agents as:

“a system that uses an LLM to decide the control flow of an application.”

While, in generative AI agents, the LLM does play the Reacting role (sensing and acting), an agent, as we’ve discussed, does more than that.

It also states that there’s a spectrum of ‘agentic’ systems, ranging from hard coded rule-based logic, to fully autonomous end-to-end generative applications.

While this is on the right lines, as mentioned, we have to be careful about including hard-coded, rule-based workflows in the category of agents. They are programs, as discussed.

The LangChain article concludes, much like Google, that agents can’t properly be defined because they are so varied, which we’ve already shown isn’t the case.

Anthropic’s definition of agentic AI

Anthropic defines agentic AI as an umbrella term, much like Google and LangChain, though with fewer subcategories.

According to Anthropic, two types of agentic system sit underneath the agentic umbrella:

Workflows, which it describes as “systems where LLMs and tools are orchestrated through predefined code paths”

Agents, which it describes as “systems where LLMs dynamically direct their own processes and tool usage, maintaining control over how they accomplish tasks”.

Again, the workflow definition of agent doesn’t jibe with the accepted definition, and the latter definition of agents lacks depth, but it’s on the right lines.
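To make Anthropic's distinction concrete, here's a minimal sketch of a travel-booking task written both ways. The tools are hypothetical, and `stub_decide` is a placeholder for an LLM call so the example runs offline; in a real agent the choice of next step would come from the model at runtime, not from code written in advance. The point is where the control flow lives: in the workflow it's fixed in code, while in the agent a decision-maker reads the current state and picks the next tool, including when to stop.

```python
from typing import Callable, Dict, Optional

State = Dict[str, object]


# Hypothetical tools shared by both styles.
def search_flights(state: State) -> State:
    return {**state, "flights": ["LHR-JFK 09:00", "LHR-JFK 18:00"]}


def check_visa(state: State) -> State:
    return {**state, "visa_ok": True}


def book_flight(state: State) -> State:
    return {**state, "booked": state["flights"][0]}


TOOLS: Dict[str, Callable[[State], State]] = {
    "search_flights": search_flights,
    "check_visa": check_visa,
    "book_flight": book_flight,
}


def workflow(state: State) -> State:
    """Workflow: a predefined code path; the same steps run in the same order."""
    for step in ("search_flights", "check_visa", "book_flight"):
        state = TOOLS[step](state)
    return state


def agent(state: State, decide: Callable[[State], Optional[str]], max_turns: int = 10) -> State:
    """Agent: a decision-maker chooses the next tool from the current state,
    and decides when the task is finished by returning None."""
    for _ in range(max_turns):
        choice = decide(state)
        if choice is None:
            break
        state = TOOLS[choice](state)
    return state


def stub_decide(state: State) -> Optional[str]:
    """Placeholder for an LLM: returns the next tool name, or None when done."""
    if "flights" not in state:
        return "search_flights"
    if not state.get("domestic") and "visa_ok" not in state:
        return "check_visa"
    if "booked" not in state:
        return "book_flight"
    return None


print(workflow({"domestic": True}))            # runs the visa check regardless
print(agent({"domestic": True}, stub_decide))  # skips the unnecessary visa check
```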

In Summary

I started this piece by complaining about how the term agentic AI is being bandied around without consideration for what it actually is. This is largely because, if you believe the hype, nobody knows what it actually is. Even the big tech companies that are creating this foundational technology broaden its definition to match the technology they sell, rather than the accepted industry definition. This leaves us in the situation Michael Wooldridge and Nick Jennings predicted in 1994: the term is now just noise.

This is a huge shame because the potential of agentic AI is great, especially in enabling businesses to begin to consider automating some of the complex processes and procedures that have thus far been off-limits, including potentially replacing some of the legacy incumbent technologies that have held them back for the last 10 or more years. I’ll write about this further in a coming piece, as well as clearing up some of the confusion between agentic AI and chatbots, conversational AI and other terms that are now getting thrown into the bucket of confusion.

The reality is, though, that some people do know what agentic AI actually is, and you’re now one of them. You now know that agentic AI isn’t new. It’s not something that’s come from the marketing department at OpenAI. It’s something that has existed since the 70s, only 20 years after the term Artificial Intelligence was first coined. You now know the qualities of an agentic system. You know the difference between rule-based workflow programs and agentic systems. You can now smell the bullsh*t on the marketing collateral. And you can now, perhaps, consider how your own AI agents might find a home in your organisation in future.

I want to give a special mention to Dagmar Monett for pointing me in the direction of some of the resources above and for giving me a place to start in researching this.

And if you’d like to figure out how agentic AI, or AI in general, can benefit your organisation, then let’s grab a coffee.

Kane, super helpful article to cut through the BS and marketing hype. It may be my simple brain having to take a step back, but surely what's important is actually understanding what my business goals are, metrics I'm looking to achieve and why etc etc then figuring out from there what technology/AI I need to implement to achieve those. Are many organisations even ready to get to the level of true Agentic AI/AI agents as per the true definition? Or would other well implemented AI tools actually suffice? Surely it's not just a blanket approach as per some marketing, but actually use case and business value based...

Kane, I really appreciate the effort that you took researching the origins and good definitions for the term. A sad reality is that we won't be able to get rid of the current broad and (deliberately) vague definitions out there, as their purpose is to sell and to some degree it feels like "old wine, in new bottles". So yes, "the term is now just noise".

I feel reminded of the "levels of autonomy" discussion for self-driving cars. Level one being assistance e.g. for lane centering or adaptive cruise control - which does not feel autonomous, but is pretty much a combination of AI for detection and rules to steer or inform.

Interestingly, the "agentic" talk within the Conversational AI space will not be able to live up to the definition based on the property table provided by Franklin and Graesser. Autonomy, temporal continuity (which to me would also relate to sensing, e.g. finding the right time when to proactively engage) and adaptability are not (yet) part of their architecture or feature-set. And while the move from rule-based decision points to more autonomous, agentic "guides" seems to be a logical step forward, it will need to prove its value. Looking forward to your next article!

When Blockchain and Distributed Ledgers were all the hype, a lot of people were attracted by the wild claims and messages of what big change this could create. A lot of them had little experience on how to build successful products or how things actually work in the real world. So the idea, to store everything in this immutable shared, distributed database seemed revolutionary. But it could only handle a fraction of the transaction volume at very high costs. So 10 years in, we have not seen any remarkable innovations other than currency. I do see parallels here. Maybe there is a possibility to create a system that offers some degree of flexibility, autonomy that so far only a human-agent was able to deliver, but the costs for design and running the system, plus the inference-time might mean it will never be more than an experiment. For 99% of the businesses the challenges and the solutions might be in a different space: how can I get 80% automated with 20% of the effort.