Part 2 of Where LLMs Actually Belong in Enterprise Chatbots

Part two in a series exploring the best use cases for generative AI in enterprise conversational AI applications. This time, knowledge search and response generation.

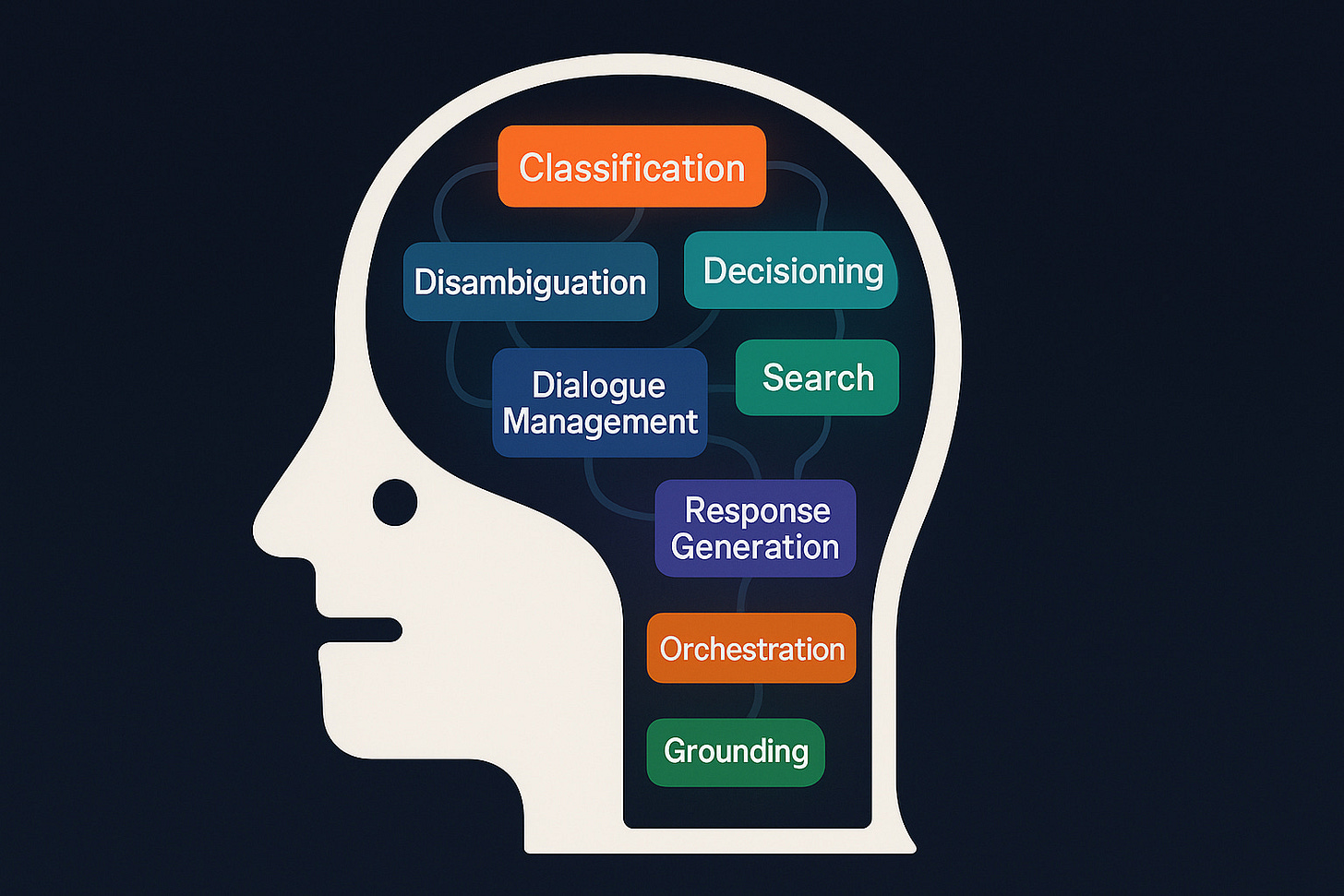

In the previous article in this series, we looked at the first area where LLMs are useful in enterprise AI chatbots: Understanding. Understanding is concerned with two primary tenets: classification and disambiguation. In this article, we’ll look at the next two components that generative AI handles particularly well: search and response generation.

Search/Knowledge retrieval

In the last article, I referred to the messiness of language. I’ve discussed this in detail with Alan Nichol, CTO of Rasa, on the VUX World podcast, including how this messiness makes it very difficult for an NLU system to handle the wide variety and high volume of user utterances.

Utterances predominantly come in two forms: questions and answers. Either your user is asking a question (or making a statement with the subtext of a question) or they’re responding to a question that your AI agent has asked of them.

The nuances in the questions asked by users are often subtle, and this subtlety consistently trips up NLU systems, which misclassify the utterance, fail to disambiguate it or just don’t understand it at all.

Given the maturity we have seen in retrieval augmented generation (RAG) over the past two years, this is a fruitful place for large language models to shine.

To get the most out of generative AI search and answer generation, it’s typically implemented in one of two ways:

1: End-to-end RAG.

This is where your entire chatbot takes on the form of a front-end to a retrieval augmented generation pipeline. Every user utterance is fed through the pipeline, where content is retrieved and answers summarised.

This might give the illusion of progress because it brings the LLM into play immediately, draws on its strengths of understanding and language generation, and removes pretty much all instances of “I’m sorry I didn’t get that” responses.

However, it comes with the inability to actually do anything. Users come to chatbots not only to get answers to questions, but to accomplish tasks. RAG, on its own, suffers from what I call the Full Stop Problem: a lack of understanding about what a conversation is, the difference between a question and an action, and the context of the user journey.
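
To make the end-to-end pattern concrete, here is a minimal Python sketch of a retrieve-then-generate loop. It is an illustration under stated assumptions, not a production design: the `DOCUMENTS` list is made-up example content, retrieval is a naive word-overlap ranking standing in for a real vector search, and `generate` is a hypothetical placeholder for whatever LLM call you use.

```python
import re
from typing import Callable

# Made-up example content; a real system would index your own knowledge base.
DOCUMENTS = [
    "You can overpay your mortgage by up to 10% each year without a fee.",
    "Lost credit cards can be frozen instantly in the mobile app.",
    "Travel insurance covers cancellations made for medical reasons.",
]


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: list[str], top_k: int = 1) -> list[str]:
    """Rank documents by word overlap with the query (a stand-in for vector search)."""
    query_tokens = tokenize(query)
    ranked = sorted(
        documents,
        key=lambda doc: len(query_tokens & tokenize(doc)),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(query: str, passages: list[str]) -> str:
    """Combine the retrieved passages and the user question into one prompt."""
    context = "\n".join(f"- {passage}" for passage in passages)
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )


def answer(query: str, generate: Callable[[str], str]) -> str:
    """Every utterance takes the same path: retrieve, then generate."""
    passages = retrieve(query, DOCUMENTS)
    return generate(build_prompt(query, passages))
```

Notice that every utterance ends in generated text. There is no branch that triggers an action, which is the Full Stop Problem in miniature.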

2: NLU first, RAG fallback

For those who have existing chatbots that are already performing moderately well, another option is to keep your NLU system where it is, and fall back to a RAG solution if and when you fail to classify an utterance. This is the way that NatWest has approached things.

It rolled out RAG in its mortgage and credit card journeys. Instead of offering a disambiguation menu showing the most probable intents, based on a confidence threshold, it offered the user the opportunity to try a generative search. In the beta program, it managed to increase containment from ~35% to 75%+ and the accuracy rate of the solution was 99% (1% hallucination!).
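
The general shape of an NLU-first, RAG-fallback router can be sketched in a few lines of Python. This is not NatWest’s implementation, only a sketch of the pattern: the `NLUResult` type, the `route` function and the 0.7 threshold are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class NLUResult:
    """Hypothetical output of an NLU classifier: top intent and its confidence."""
    intent: str
    confidence: float


# Assumed value for illustration; tune it against your own traffic.
CONFIDENCE_THRESHOLD = 0.7


def route(result: NLUResult, threshold: float = CONFIDENCE_THRESHOLD) -> str:
    """Keep the designed flow when the NLU is confident, otherwise fall back to RAG."""
    if result.confidence >= threshold:
        return f"intent:{result.intent}"
    return "rag_fallback"
```

For example, `route(NLUResult("check_balance", 0.92))` stays on the designed flow, while `route(NLUResult("check_balance", 0.41))` hands the utterance to the generative search.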

These aren’t the only ways to implement RAG in your chatbots. There are many others. RAG itself is a concept of increasing academic and practical interest. Inbenta, for example, has an agentic RAG implementation and Voiceflow has RAG performing classification instead of NLU. The opportunities are pretty much endless.

Response generation

Getting search right isn’t just about finding the information; it’s about putting together a response, based on the information you retrieve, that actually answers the user’s question. And this is just one type of response that your AI chatbot will need to serve.

You’ll also need to cater for the formulation of questions, the grounding of responses, handling jailbreak attempts and out-of-scope discussions. You’ll need to recover when things go sideways, use discourse markers to orient users, handle objections, corrections and incomplete responses. You’ll need to respond to disgruntled users, novices who have never used a chatbot before, and returning power users who expect you to behave like ChatGPT. You’ll need to handle small talk, silence, question marks and ambiguity, as well as general questions, specific questions and ultra-personal questions. You might have use cases that are flippant, risk-free and fast, and others that are highly emotive, extremely important and slow.

All that is to say that a diligently designed experience doesn’t simply throw everything to an LLM and say ‘have at it’. Instead, it uses the LLM for what it’s good at, which is sounding natural, so that you can handle all of the scenarios mentioned above with clarity and grace.

To put this in more concrete terms, here are a few practical ways large language models can be used in response generation:

1: Knowledge summarisation

The first is the easiest, and the one that requires the least explaining: the ability to effectively summarise knowledge. LLMs can do this in a way that is specific to the question. Therefore, answers to questions can be a lot more nuanced and more personal when using large language models.

2: Contextual grounding

The second is something that I call contextual grounding. This is leveraging the dynamic capabilities of language generation to respond to things that are out of scope in your conversation: things that you do not understand. Rather than saying ‘sorry I didn’t get that’, you make the response contextual to the user’s input.

For example, if you are an insurance company that’s having a conversation with a customer who asks what the weather might be like tomorrow, this would be a conversation that is out of scope for your AI agent.

Normally, an NLU system will fail here and say ‘sorry I didn’t understand that.’ But you can use large language models to create a dynamic response that is contextual, such as “I’m really sorry, but discussing the weather isn’t something I’ve been trained on. I can help you with your insurance queries, however.”

Contextual grounding is a way of failing gracefully while bringing the conversation back into a domain that you can support.
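
One way to approach this is to hand the out-of-scope utterance to the LLM along with a tightly scoped instruction. The Python sketch below only builds the prompt. The function name, the prompt wording and the idea of passing in a `supported_domain` string are my assumptions for illustration, and the actual LLM call is left out.

```python
def build_grounding_prompt(user_utterance: str, supported_domain: str) -> str:
    """Build a prompt asking the LLM to decline gracefully and steer back in scope."""
    return (
        "You are a customer service assistant. "
        f"You can only help with {supported_domain}. "
        "The user has asked about something outside that scope. "
        "In one or two sentences, acknowledge what they asked about, "
        "explain politely that you cannot help with it, "
        f"and offer to help with {supported_domain} instead. "
        "Do not attempt to answer the out-of-scope question.\n\n"
        f"User: {user_utterance}"
    )


# Example: the insurance scenario described above.
prompt = build_grounding_prompt(
    "What will the weather be like tomorrow?",
    "insurance queries",
)
```

Keeping the instruction this narrow means the model is only asked to sound natural while declining, not to decide what the business can and can’t support.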

3: Implicit confirmations

An implicit confirmation is where you confirm to the user that you’ve understood what they’ve said, or that you’ve successfully collected some information, without requiring them to explicitly state that the information you have is accurate.

For example, let’s say you’re a travel agent and you’re aiming to find the ideal holiday for your user. You ask the user:

“Do you have an idea of what country you’d like to travel to?”

And they respond: “I’ve been to Ibiza before, that was nice.”

Now, typically here, a traditional chatbot, when it receives that utterance, will be searching for a country entity. Almost everything else is irrelevant. If it spots Ibiza, the chatbot’s response will be something like:

“Ibiza, got it. And how long would you like to stay?”

Here, the chatbot has used an implicit confirmation to tell the user that it’s understood the intended destination is Ibiza, without having the user explicitly confirm.

Aside: An explicit confirmation, by contrast, would look something like this:

User: “I’ve been to Ibiza before, that was nice.”

Chatbot: “You’d like to go to Ibiza, is that right?”

User: “Yes”

Explicit confirmations in the above scenario are overkill. You should use an explicit confirmation in situations where getting it wrong would irreparably affect the conversation.

For example, you’d want to explicitly confirm the amount you’re transferring from one bank account to another. However, you could implicitly confirm the account you’re checking the balance of. End aside.
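
The choice between the two styles can be expressed as a simple rule. Here is a minimal Python sketch, assuming a hypothetical `high_risk` flag that your conversation design sets for each piece of information collected. The response templates are illustrative only.

```python
def confirmation(value: str, next_question: str, high_risk: bool) -> str:
    """Pick a confirmation style based on the cost of getting the value wrong."""
    if high_risk:
        # Explicit: stop and ask the user to verify before moving on.
        return f"Just to check, you said {value}. Is that right?"
    # Implicit: echo the value back and carry on with the next question.
    return f"{value}, got it. {next_question}"


# Low risk: a holiday destination can be echoed and the conversation moves on.
print(confirmation("Ibiza", "And how long would you like to stay?", high_risk=False))

# High risk: a transfer amount is confirmed before anything else happens.
print(confirmation("250 pounds", "Which account is it going to?", high_risk=True))
```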

Large language models can make those implicit confirmations even more natural and unique to each user. For example:

User: “I’ve been to Ibiza before, that was nice.”

LLM Agent: “You’re right, Ibiza IS nice! So how long would you like to go there for?”

Implicit confirmations not only create more personal experiences, but they also amplify the perception of understanding. If you can respond to customers with a contextual response based on their last conversational turn, even if you can’t help them, you can demonstrate that you at least understood them. This is a recipe for a great experience.

All of the other response types I mentioned at the outset of this section have the potential to leverage the innate language generation capability of large language models to produce more human-like, natural-sounding conversations, and I’d be happy to share more examples if you think that would be worthwhile.

Response’s cousin, dialogue management

Generating realistic, natural and contextual responses to user queries requires a good grasp of the context and state of the conversation. You have to know what’s happened and what’s going to be offered next. This is all shaped by how the conversation unfolds, how the business process that undergirds it is designed and how you successfully get users from A to B, from initial query to fully resolved use case.

This is the area of dialogue management, and that’s what we’ll cover next.